How does it work?

Jobs

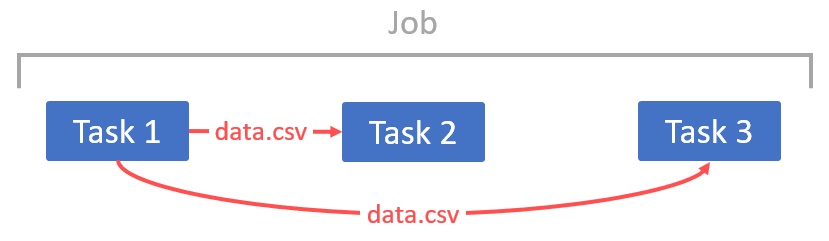

Product-Live Data Factory lets you create Jobs. A Job is a workflow that imports, exports, or transforms data stored in Product-Live, or manipulates files and data stored outside of Product-Live. A Job consists of Tasks that are chained together.

Tasks are elementary actions, for example FTP Get (retrieves a file from an SFTP/FTP server) or CSV to XML (converts a CSV file to an XML file, which is easier to manipulate).

For example, a Job that imports data daily from your ERP into Product-Live could be built as follows:

- FTP Get: get the latest CSV file from an FTP server.

- CSV to XML: convert the CSV file to XML.

- Transform XSLT: transform the XML into the format expected by Product-Live.

- Import Items: import the products into Product-Live.

This Job can be executed manually or periodically.
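
The chaining of Tasks described above can be pictured as a list of functions, each receiving the output of the previous one. The following is a minimal sketch of that idea only, not the Data Factory Job format or API: every function name is hypothetical, the FTP step returns a hard-coded CSV, the transformation step is a plain Python stand-in for an XSLT stylesheet, and the import step only returns the identifiers it would import.

```python
import csv
import io
import xml.etree.ElementTree as ET
from typing import Any, Callable, List

# Assumption: a Task is modelled as a function that takes the previous Task's output.
Task = Callable[[Any], Any]


def ftp_get(_: Any) -> str:
    # Stand-in for "FTP Get": returns a hard-coded CSV instead of contacting a server.
    return "sku,name\nA1,Chair\nB2,Table\n"


def csv_to_xml(csv_text: str) -> ET.Element:
    # "CSV to XML": one <row> per CSV record, one child element per column.
    root = ET.Element("rows")
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = ET.SubElement(root, "row")
        for column, value in record.items():
            ET.SubElement(row, column).text = value
    return root


def transform(rows: ET.Element) -> ET.Element:
    # Stand-in for "Transform XSLT": reshapes rows into a made-up <items> format.
    items = ET.Element("items")
    for row in rows.findall("row"):
        item = ET.SubElement(items, "item", id=row.findtext("sku"))
        item.text = row.findtext("name")
    return items


def import_items(items: ET.Element) -> List[str]:
    # Stand-in for "Import Items": returns the ids that would be imported.
    return [item.get("id") for item in items.findall("item")]


def run_job(tasks: List[Task]) -> Any:
    # Run the Tasks in order, feeding each output to the next Task.
    result: Any = None
    for task in tasks:
        result = task(result)
    return result


imported = run_job([ftp_get, csv_to_xml, transform, import_items])
print(imported)  # ['A1', 'B2']
```

Because each step only depends on the output of the previous one, a step can be swapped (for instance, another file source instead of FTP) without touching the rest of the chain.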

Pipelines

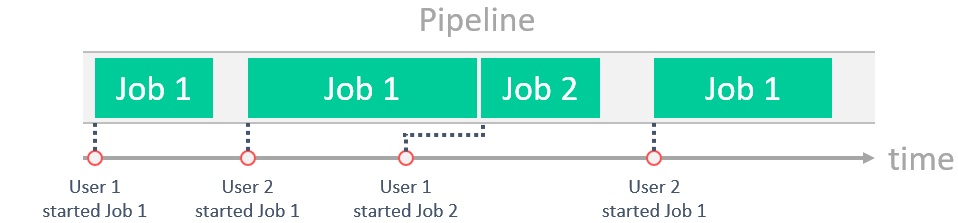

Jobs are executed in Pipelines on a first in, first out basis. The execution time of a Job depends on its input. In the example, Job 1 exports items and sends them to an SFTP server, so its execution time depends on the number of items the user has selected.

A Job must be associated with one and only one Pipeline.
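
The first in, first out rule can be illustrated with a small queue simulation. This is a sketch under stated assumptions, not the platform's scheduler: the `Pipeline` class, its methods, and the durations are hypothetical, and the sketch assumes a Pipeline runs one Job at a time.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple


@dataclass
class Pipeline:
    # Hypothetical model: a named queue of (job_name, duration) pairs.
    name: str
    queue: Deque[Tuple[str, int]] = field(default_factory=deque)

    def submit(self, job_name: str, duration: int) -> None:
        # New Jobs always join the back of the queue.
        self.queue.append((job_name, duration))

    def run(self) -> List[Tuple[str, int, int]]:
        # Execute Jobs from the front of the queue; return (job, start, end).
        clock = 0
        timeline = []
        while self.queue:
            job_name, duration = self.queue.popleft()
            timeline.append((job_name, clock, clock + duration))
            clock += duration
        return timeline


pipeline = Pipeline("default")
pipeline.submit("Job 1", 30)  # e.g. a large export
pipeline.submit("Job 2", 5)   # a short Job submitted afterwards
timeline = pipeline.run()
print(timeline)  # [('Job 1', 0, 30), ('Job 2', 30, 35)]
```

In this model, Job 2 cannot start before Job 1 has finished, even though it is much shorter.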

By default, your account has one Pipeline, but you can purchase as many additional Pipelines as you need. For example:

- You want to isolate Jobs: to prevent your Sandbox Jobs from slowing down your Production Jobs, use one Pipeline for your Sandbox Jobs and another Pipeline for your Production Jobs.

- You need a Job to be executed at a precise time: that Job needs a dedicated Pipeline.
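
The effect of isolating Jobs can be estimated with a few lines of arithmetic. The sketch below is illustrative only: the function, Job names, Pipeline names, and durations are hypothetical, and it assumes that all Jobs are submitted at time 0 and that each Pipeline runs one Job at a time in submission order.

```python
from typing import Dict, List, Tuple


def start_times(jobs: List[Tuple[str, str, int]]) -> Dict[str, int]:
    # jobs: (job_name, pipeline_name, duration), listed in submission order.
    next_free: Dict[str, int] = {}  # time at which each Pipeline becomes free
    starts: Dict[str, int] = {}
    for job, pipeline, duration in jobs:
        start = next_free.get(pipeline, 0)
        starts[job] = start
        next_free[pipeline] = start + duration
    return starts


# Both Jobs share a single Pipeline: the Production Job waits for the Sandbox Job.
shared = start_times([
    ("sandbox-export", "default", 60),
    ("prod-import", "default", 5),
])

# One Pipeline per environment: the Production Job starts immediately.
isolated = start_times([
    ("sandbox-export", "sandbox", 60),
    ("prod-import", "production", 5),
])

print(shared["prod-import"], isolated["prod-import"])  # 60 0
```

The same reasoning applies to a Job that must run at a precise time: with a dedicated Pipeline, no other Job is ever queued ahead of it.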

For any question regarding the Data Factory platform, do not hesitate to contact the Product-Live team.